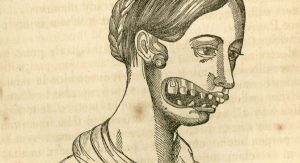

Last week I had the pleasure of being invited to participate in a ‘talk back’ session following a performance of Radium Girls with cast members and several trial lawyers who handle workers’ compensation cases. Put on at the Regent Theatre in Arlington by the Burlington Players, and sponsored by the Massachusetts Academy of Trial Attorneys, Radium Girls is a powerful play that traces the events of the mid-1920s as a group of five women who were employed by the U.S. Radium Corporation try to recover damages in court as they succumb to horrible ailments caused by radium poisoning. Radium, absorbed into the body in much the same way as calcium, wreaks havoc with bone tissue, often concentrating in the mouth and jaw. It caused debilitating, disfiguring and ultimately lethal injuries to many exposed to this substance–not unlike ‘phossy jaw’ caused by exposure to phosphorus in the manufacture of matches–yet the legal system generally did not provide redress to workers for these types of injuries.

By way of background, the legal system in the early 19th century was ill-equipped to deal with the rapid technological, economic, social and other advances wrought by industrialization. Beginning in the 1820s in the U.K., and slightly later in the U.S., the advent of textile mills and other forms of industry created a sea-change in the way manufacturing was conducted. The legal system had had centuries to adapt rules regarding traditional employee-employer relationships, most often known as master-servant law (you can access my two articles on this subject here, if desired), and these cases contemplated cottage industries where there were close personal working relationships between an employer and perhaps (at most) a handful of servants. There was something in the nature of reciprocal responsibilities between the parties: while the law tilted towards the employers, servants did have legal recourse for non-payment of wages, breach of the terms of contracts or indentures, mistreatment, and the like. With the advent of industrialization, suddenly there were factories employing hundreds and sometimes thousands of workers, and issues that seldom had to be dealt with before were becoming commonplace. For example, dams burst and flooded fields; steam boilers exploded and scalded or killed workers and steamboat passengers; employees had limbs horribly mangled in the cogs of industry; factories belched pollutants into the air and spewed effluvia into rivers; and locomotives–perhaps the most evident sign of progress–killed cattle, struck pedestrians crossing railway lines, and set fire to fields by spewing sparks into the air. Industrialization was seen as a significant, perhaps an overriding, social good, and Anglo-American legal regimes generally reflected that. So powerful were the forces of progress that they swept away entrenched, centuries-old legal principles that conflicted with them: my favorite example being the now-largely-forgotten law of deodand.

Deodand was the ancient legal principle that if an animal or inanimate object occasioned the death of one of the King’s subjects, the item was forfeit to the Crown; over time this rule changed so that a monetary value was commonly ascribed to the object or animal instead, with that amount being transferred to the Crown or to the family of the deceased (a marvelous article on this topic, “The Deodand and Responsibility for Death”, may be found here). Somewhat predictably, it was that driver of industrialization known as the ‘railroad’ which was to prove that deodands had outlived their usefulness, as illustrated by the Sonning Cutting accident of 1841 in which nine people were killed. The law of deodand was abolished formally by Parliament in 1846. It has been said to live on in the U.S. as the basis for the somewhat-related and contentious “civil forfeiture” or “asset forfeiture” principle.

Employees who sustained injuries on the job were generally barred from recovering for their injuries by what has been referred to as the ‘unholy trinity of defenses to compensation’. The first doctrine was assumption of risk: simply put, a worker was assumed to know the risks of employment, and to accept them, by virtue of accepting a wage. In theory, this rule posited, wages were adjusted to compensate for the level of risk and workers were always free to work elsewhere. Employers, for their part, were only required to provide the level of safety measures common to the industry as a whole– a ‘leveling to the bottom’ scenario that meant few precautions for worker safety were taken. Workers were also frequently required to sign employment contracts in which they gave up their right to sue, known not-so-affectionately as “right to die” clauses. The second doctrine, the rule of contributory negligence, held that if the worker was in any way responsible for his injuries, then the employer could not be held liable. The third, the fellow servant rule, held that employers were not responsible for the actions of another employee– an injured employee had to seek compensation from the fellow employee directly. Predictably, the effect of these principles was to essentially preclude employees from gaining compensation. A poem that wonderfully captures the injustice of these rules is “Butch Weldy” by Edgar Lee Masters (1868-1950), found in Poetry of the Law edited by David Kader and Michael Stanford (University of Iowa Press, 2010) at 78:

After I got religion and steadied down

They gave me a job in the canning works,

And every morning I had to fill

The tank in the yard with gasoline,

That fed the blow-fires in the sheds

To heat the soldering irons.

And I mounted a rickety ladder to do it,

Carrying buckets full of the stuff.

One morning, as I stood there pouring,

The air grew still and seemed to heave,

And I shot up as the tank exploded,

And down I came with both legs broken,

And my eyes burned crisp as a couple of eggs.

For someone left a blow-fire going,

And something sucked the flame in the tank.

The Circuit Judge said whoever did it

Was a fellow-servant of mine, and so

Old Rhodes’ son didn’t have to pay me.

And I sat on the witness stand as blind

As Jack the Fiddler, saying over and over,

“I didn’t know him at all.”

By the turn of the 20th century, progress in the U.S. was evident. The earliest departures from these rules applied to railroads–which to this day have different statutory schemes governing workers’ compensation–with Congress in 1906 and 1908 passing legislation to soften the contributory negligence rule. Most work remained state-by-state, with the first comprehensive workers’ compensation scheme being enacted in Wisconsin in 1911 and the last in Mississippi in 1948. Meanwhile, injuries continued to mount; it was estimated that in 1900 there were 35,000 work-related deaths per year in the U.S. and some 2 million injuries. A gradual chipping-away at the law by jury awards, some legislative movement and a growing sense of the unfairness of many workers’ compensation regimes–not to mention the rise of the contingency fee structure that made legal services much more accessible to the working class–meant that over time these obstacles to workers’ compensation eroded.

These laws and cases, however, dealt with discrete, tangible, traumatic work injuries– they did not encompass, nor could they predict, the damaging effects of latent workplace injuries as exemplified by the experience of the Radium Girls or those exposed to phosphorus who contracted “phossy jaw” as mentioned earlier. In the later years of World War I and thereafter, companies such as U.S. Radium Corporation produced luminous watch dials and other items, using radium salt mixed with zinc sulfide to form a paint known as “Undark”. Young women moistened their paintbrushes in their mouths to keep a fine point as they painted watch faces, working day after day in poorly-ventilated factories where everything was coated with radioactive dust. To amuse their boyfriends, they painted their teeth and fingernails to produce an enticing glow-in-the-dark effect. And glow in the dark they did! At no time were they told that radium was dangerous, even while technicians and others protected themselves from radium’s effects. Predictably, many of these women succumbed to horrific ailments, including necrosis of the jaw (known as “radium jaw”). Five such women fought a lengthy and high-profile legal battle against the U.S. Radium Corporation in the 1920s, culminating in a settlement in 1928. All along the way U.S. Radium denied liability and even smeared the women’s reputations by publicly claiming they were infected with syphilis, while also buying off dentists and doctors, using executives to pose as medical specialists, and using delaying tactics in court, among other unsavory practices. None of the five women lived more than a few years after the settlement, but the saga helped shape public and political opinion. In 1949 Congress expanded legislative protections for workers harmed by occupational diseases, and industrial safety standards were ratcheted up in the years following the Radium Girls’ struggle.

While radium-based paint was used extensively in the World War II period and as late as the 1960s, further cases of radium jaw were avoided through the use of safety procedures and training– procedures and training that were far from onerous and indicate how easily these tragedies could have been avoided.

And as Eleanor Swanson writes about them in her poem “Radium Girls”: “Now, even our crumbling bones/will glow forever in the black earth”….